Clients tend to underestimate the app development timeline and often hand software development houses unrealistic deadlines for building a mobile application.

While every application is different, the outline and architectural framework behind it are broadly similar in all cases. What sets one app apart from another is the intricate detail and code that go into it, the factors that make the application unique and special. This is where the actual hard work begins.

If you are wondering how long it takes to develop an app, the answer is that a lot goes into creating one. To make things easier, we have put together the complete app timeline that a custom software development company usually follows when handed a mobile application project.

Turning an idea into a full-fledged, functional product is labor-intensive and demands a ton of resources. It takes time, attention, and care to turn it into an amazing product.

So how do you create a timeline for an app? The average software development timeline consists of the following phases, each locked into a specific and realistic timeframe:

Mobile App Development Timeline – An Overview

Ideation & Formation: 1 – 2 Weeks

Toss around ideas until you agree on the one that best fits your brand, then validate it with the help of our top consultants and appropriate discovery workshops. You can also walk through a mobile app RFP document to better understand the requirements of a mobile app development project.

Research: 1 – 2 Weeks

With appropriate research, plan the best course of action for your app. That means sorting through your competitors and finding a unique factor with suitable functionality.

Features & Functionality: 1 – 2 Weeks

Next in line is sorting through the technical factors and selecting the appropriate platform, suite, and tools to help your app stand out from the crowd.

Software Development: 3 – 6 Months

With all the research, planning, and features in place, the framework and development phase begins. This includes the UX and UI design, frontend, and backend development for the entire project, which may take a few months to complete altogether.

QA Testing: 3 – 6 Weeks

An app is never ready without undergoing a thorough quality assurance process where all the performance testing and load testing occur. Teams also get rid of any bugs that they may come across.

App Launch: 1 Week

The final week is spent sorting out the last bits and pieces of the app and polishing the design and functionality before its release on the app store.

Post-Launch Support & Maintenance: 2 Weeks

While the application is shining through and getting all the love from its customers post-release, we keep a close eye on it to make sure it is functioning flawlessly.
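To see how these ranges add up, here is a minimal Python sketch that totals the phases listed above. It rests on two assumptions that are not part of the timeline itself: a month is treated as 4 weeks, and the phases run strictly one after another with no overlap. The names `PHASES`, `WEEKS_PER_MONTH`, and `total_weeks` are purely illustrative.

```python
# Rough timeline estimate from the phase ranges in the overview.
# Assumptions (illustrative only): one month counts as 4 weeks,
# and phases run back to back with no overlap.
WEEKS_PER_MONTH = 4

# (phase name, minimum weeks, maximum weeks)
PHASES = [
    ("Ideation & Formation", 1, 2),
    ("Research", 1, 2),
    ("Features & Functionality", 1, 2),
    ("Software Development", 3 * WEEKS_PER_MONTH, 6 * WEEKS_PER_MONTH),
    ("QA Testing", 3, 6),
    ("App Launch", 1, 1),
    ("Post-Launch Support & Maintenance", 2, 2),
]


def total_weeks(phases):
    """Return the (minimum, maximum) total duration in weeks."""
    low = sum(min_weeks for _, min_weeks, _ in phases)
    high = sum(max_weeks for _, _, max_weeks in phases)
    return low, high


low, high = total_weeks(PHASES)
print(f"{low}-{high} weeks")  # 21-39 weeks
print(f"{low / WEEKS_PER_MONTH:.2f}-{high / WEEKS_PER_MONTH:.2f} months")  # 5.25-9.75 months
```

Under these assumptions the total lands at roughly 5 to 10 months. Overlapping phases, or a different weeks-per-month figure, would shift the estimate.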

7 Stages of the App Development Process

Typically, the average app development timeline is broken down into these 7 phases, which cover everything from beginning to end. They are as follows:

Idea and Formation: 1-2 Weeks

The beginning of every great project is an idea. Not every idea gets to see the light of day, and some are as experimental as they can be. Still, it’s good to jump from one possibility to another, because tossing ideas around is often how you eventually reach the idea that is meant to be.

As simple as it may seem, a lot goes into initial plot development and idea formation. You can pick any idea, but when it comes to turning that idea into a full-blown application, there are a ton of things one needs to discard. The practicality and rationality of an idea need to align with the brand, the current scenario, and the general need for it.

Research: 1-2 Weeks

Every idea seems great until you start researching it and find out that you aren’t the first one to come up with it. You’ll find numerous applications on iOS and Android, along with websites, that are already doing much the same thing, and successfully, if we may say.

What makes your idea different from theirs? What unique factor will your application bring in? If you have a sure-shot answer for that, then congratulations, you are ready for the next step, which is bringing that idea to life. It’s not as simple as you might think, because turning those features into functionality that users can understand and use is one primary task that we need to succeed in.

At VentureDive, appropriate time is given to the research and development of an idea. It is necessary to carefully address all these factors to ensure that the plan is foolproof and does not require any more discussion or edits. Locking things down here will give you a clear sense of direction in terms of what features you can introduce in your product and how you can enable those features while aligning with your brand value and product.

Features and Functionality: 1-2 Weeks

After getting all the required research and data aligned with your product, it’s your turn to decide on the features and functionality of your product. When we say features and functionality, it means all the unique factors that you want to be added to your product, all the technical elements that will make your mobile application exquisite and top-notch.

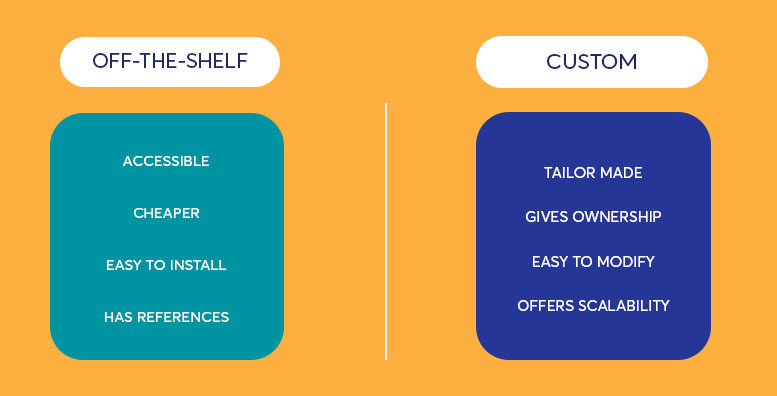

One great benefit of planning ahead is that you can provide a budget estimate along with your preferred platform for the development of your mobile application, e.g., hybrid or native app development. With these items selected, you are ready to move on to the next step, which is the key ingredient in our mobile app development timeline: the actual software development.

Software Development: 3 – 6 Months

After all the pondering and research comes the next big thing in the app development timeline: the development of the architectural framework itself. This is perhaps the longest process in the whole app development timeline, and rightfully so. Software development is no piece of cake. It needs to be done with care to keep out the bugs and crashes that commonly creep in during development.

Most brands hire software houses based on how little time they will spend on software development. We do understand where they are coming from, which is saving as much money as possible, but it still doesn’t make sense to compromise the quality of the application.

Design (UX UI)

UX and UI design is another crucial phase in the software development timeline that builds an effective system where a user can smoothly navigate through all the processes and effectively complete a task.

A UX designer plans the screens with all the keys and buttons that a potential user will need to complete an action. This includes accessing the site to complete a sign-up process or smoothly completing a purchase through various payment methods.

UI designers play a key role in making the application and all of its functionality visually appealing. They achieve this through color schemes, transition effects, animation, font sizes, and graphics that combine to create an application that is not only aesthetically appealing but also easy to navigate, promoting a great user experience along with the interface.

Backend Development

Backend development consists of all the code and behind-the-app factors that make the application functional. It is a long and tedious process that requires a lot of alterations and testing along the way.

Developers need to watch for bugs that surface during programming and work on eliminating them at all times. This is necessary because, with a bug-ridden backend, the whole foundation of the application will come crashing down, no matter how hard you have worked on the architecture of the software.

Faulty backend code will topple all the hard work that developers have put into the application, and even if the mobile application does take off, it won’t survive for long.

Frontend Development

Whatever happens at the back end is eventually displayed on the front end of the application. It’s what users see and navigate through when they open the mobile application. Hence, front-end developers work hand in hand with the UX and UI designers to make it a fully functioning application.

People often mistake front-end development for the basic task of making the application visually appealing, but there’s more to it than one might imagine. Front-end development is no piece of cake, because technological growth has brought various tools and technicalities that a developer must get familiar with and then implement effectively in the app.

QA Testing: 3-6 Weeks

Custom software development is incomplete without quality assurance testing. This is the final and perhaps the most important phase in the software development timeline, where the finished product is evaluated and tested end to end to see whether it works smoothly.

Quality assurance is essential because designers and developers may not be able to see the glitches in their own phases until all these elements are put together in their complete form and tested on various platforms by multiple quality assurance engineers. This activity exposes the minor and major bugs and glitches that could not be seen before.

Furthermore, quality assurance’s role is to study how users will experience the application when they open it and how they will perceive it.

Hence, to further enhance the quality of the product, the testing phase is divided into 3 parts, which are as follows:

- Performance Testing: Going through all the features and functionality of the application along with its potential to scale and handle numerous users and load at the same time.

- Security Testing: Verifying that data is stored in the right places and detecting any leaks of data or sensitive information.

- Usability Testing: Testing the app on various devices in various settings to check how well it holds up before its final release.
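As a rough illustration of the performance testing item above, the following Python sketch fires many simulated requests in parallel and reports latency figures. Everything here is an assumption for demonstration: `fake_request` is a hypothetical stand-in that just sleeps for 10 ms instead of calling a real backend, and `run_load_test` is an illustrative helper, not a tool any particular QA team uses. A real load test would target the actual backend, usually with a dedicated load-testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(user_id: int) -> float:
    """Hypothetical stand-in for one API call; returns elapsed seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # simulated server work (assumed 10 ms)
    return time.perf_counter() - start


def run_load_test(num_users: int, concurrency: int) -> dict:
    """Run num_users simulated requests, at most `concurrency` at a time."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(fake_request, range(num_users)))
    # Index of the 95th-percentile latency in the sorted list.
    p95_index = max(0, int(len(latencies) * 0.95) - 1)
    return {
        "requests": len(latencies),
        "p95_s": latencies[p95_index],
        "max_s": latencies[-1],
    }


result = run_load_test(num_users=50, concurrency=10)
print(result)
```

Raising `num_users` and `concurrency` while watching how `p95_s` and `max_s` change gives a first, simplified sense of how a system behaves under growing load.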

After a thorough review and experimentation with all the versions, and after using the application on different devices, it is time to determine whether the app fulfills all the criteria that were set at the beginning. It is also time to decide whether you, as the owner, are satisfied with the hard work and effort the team has put in to create something so precious that, once publicly released, it may change the way things work in the tech industry in the coming years.

App Launch: 1 Week

Now that the application has gone through all the required tests, it’s time to officially launch the application and bring it to the Google Play Store or the Apple App Store. For the App Store, the application has to go through a thorough review by Apple engineers, who test the app against their guidelines. If deemed fit, it will appear on the App Store within anywhere from a couple of days to a month.

Post-Launch Support & Maintenance: 2 Weeks

The final role of any custom software development company is to keep a close eye on the reviews and ratings of the application. This feedback allows the company to keep things running smoothly and remove any unexpected bugs immediately. These lessons and reviews also help the development firm put together an effective expansion plan for the next phase.

Mobile app development timeline – Conclusion

As far as we can tell, this blog has covered the main factors and answered the main questions around how long it takes to develop an app, and walked you through the factors and features to look out for when researching the complete software and app development timeline.

FAQs for Mobile app development timeline

Normally, a time frame of 8-10 months is suitable for completely developing a mobile application, from the initial idea development to the final deployment of the product.

The 5 core phases of app development are the idea and research, app development, UX UI design, quality assurance, and the final deployment.

The front-end development of any application mostly takes about 6-8 weeks.

Average app development costs can range anywhere from $10,000 to $100,000 or even more, depending on your budget, requirements, and the time it takes to create the app.